Upgrading Synology NAS - Again

So I have two systems for data storage:

1. A distributed cluster of Raspberry Pi-like mini-NAS servers (called Qua Stations) with 1 TB each, running a modified version of Ubuntu Server and syncing data with Resilio Sync.

2. A typical Synology NAS.

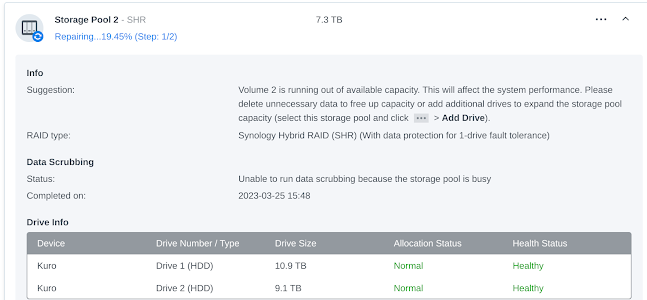

The NAS has 2 drives that are essentially mirrored using Synology Hybrid RAID (SHR). Theoretically, if one drive fails, the data will remain available and working. Still, if there is a hardware error, a software error, or human error, it is possible that both drives could be corrupted and I could lose data.

Since the drives are running Btrfs, snapshots can be taken, and most human error (and some software errors) can be worked around by mounting an older snapshot. But this doesn't stop SATA controller issues, low-level Linux bugs, or something like a fire, electrical surge, or water damage from destroying the data on both drives at the same time.

So, all of the data is synced to the Qua Station servers via Resilio Sync, which has its own change management system that archives old versions of files.

It's easy enough to add a new Qua Station, as they are very cheap and I have the process perfected by now. If one breaks, it's also easy enough to just reformat it and resync it in most cases.

But how about the NAS?

Often you will see recommendations that the drives used in NAS systems should be not only the same size, but the same make and model. Why? Well, one reason is that if one drive performs better than the others, in many cases you will still only get the performance of the worst drive in the array.

There is an issue with using all drives of the same make and model, which is that they will have a strong tendency to fail around the same time (the infamous "bathtub curve").

Also, even if one drive has failed and the other is still working, the situation is quite fragile.

For example, say you have a 2-drive RAID, with drives A and B of the same size, make, and model that were purchased at the same time. If drive B fails and you insert drive C to repair the RAID array, then drive A, which is probably going to fail soon, will be used to rebuild drive C. Drive A will be read from continuously for many hours in order to get all of the data synced, which may hasten the failure!

To avoid this situation, I do two things:

1. I use different model drives

2. I replace them well before they are likely to die

But using different model drives also leads to another benefit: you can easily increase your available storage space.

An example:

Scenario 1:

- You have two drives, A and B, both are 8 TB, and you are using them in a mirrored array.

- When A fails, you replace it with another 8 TB drive.

- You still have only 8 TB available.

Scenario 2:

- You have two drives, A and B, both are 8 TB, and you are using them in a mirrored array.

- When A fails, you replace it with a 10 TB drive.

- You still have only 8 TB available (because the volume will only be the size of the smaller drive).

Scenario 3:

- You have two drives, A and B, both are 8 TB, and you are using them in a mirrored array.

- When A fails, you replace it with a 10 TB drive, and then immediately replace B with a 10 TB drive as soon as the array is done repairing.

- You will have 10 TB available, but you need to wait for a repair twice, and you need to buy two drives.

Scenario 4:

- You have two drives, A and B, A is 8 TB and B is 10 TB, and you are using them in a mirrored array.

- Drive A hasn't failed, but you decide to replace it with a 12 TB drive.

- The storage volume size was 8 TB, but upon replacing the 8 TB drive with the 12 TB drive, your volume size can be expanded to 10 TB.

- Next time, in a year or so, you replace drive B (10 TB) with a 14 TB drive, and your capacity will expand from 10 TB to 12 TB.
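The four scenarios come down to one rule: a two-drive mirror is only as large as its smaller drive. Here is a minimal Python sketch of that rule applied to Scenario 4. The function names are made up for illustration and have nothing to do with DSM itself, and it assumes a plain two-drive mirror like the one described above.

```python
def mirrored_capacity_tb(drive_a_tb, drive_b_tb):
    """Usable size of a two-drive mirror: limited by the smaller drive."""
    return min(drive_a_tb, drive_b_tb)


def replace_drive(drives, slot, new_size_tb):
    """Return a new drive layout with one slot swapped for a new drive."""
    updated = dict(drives)
    updated[slot] = new_size_tb
    return updated


# Scenario 4: start with mismatched drives and replace the smaller one each time.
drives = {"A": 8, "B": 10}
history = [mirrored_capacity_tb(drives["A"], drives["B"])]

for slot, new_size_tb in [("A", 12), ("B", 14)]:
    drives = replace_drive(drives, slot, new_size_tb)
    history.append(mirrored_capacity_tb(drives["A"], drives["B"]))

print(history)  # [8, 10, 12]
```

Each single-drive purchase bumps the usable capacity up to the size of the drive that stayed behind, which is why staggering the replacements works out well.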

The replacement process itself:

- Log in to DSM and launch Storage Manager.

- Go to the HDD/SSD section, select the drive you want to remove and select "Deactivate Drive". Confirm the ominous warnings.

- The drive you have deactivated will blink, and the NAS will start beeping. Unlock the drive sled and remove the drive.

- Pop the old drive out of the drive sled and pop the new one in, and slide it into the NAS.

- A dialog will appear in DSM asking what to do about the new drive. Select the option to repair an existing volume.

- You will be asked if you want to expand the volume if the new drive is larger than the one being replaced. I select this option.

- The repair will begin. It can take many hours with a large drive that is mostly full.
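To get a feel for "many hours", here is a rough back-of-the-envelope sketch in Python. It assumes the repair is limited purely by sustained drive throughput, and the 150 MB/s figure is an assumed number for illustration, not a measurement from my NAS. Real repairs will likely be slower, since the NAS is usually doing other work at the same time.

```python
def estimate_repair_hours(data_tb, throughput_mb_per_s):
    """Rough lower bound on repair time: data to copy divided by sustained throughput."""
    if throughput_mb_per_s <= 0:
        raise ValueError("throughput must be positive")
    bytes_to_copy = data_tb * 1e12  # decimal terabytes, as drive vendors count them
    seconds = bytes_to_copy / (throughput_mb_per_s * 1e6)
    return seconds / 3600


# Assumed figures for illustration only; real throughput varies by drive and load.
for size_tb in (8, 10, 12):
    hours = estimate_repair_hours(size_tb, throughput_mb_per_s=150)
    print(f"{size_tb} TB at 150 MB/s: about {hours:.1f} hours")
```

Even under these optimistic assumptions, a mostly full 8 TB volume works out to more than half a day of continuous reading from the surviving drive.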
