Upgrading the Storage Pool in My Synology NAS

To some extent, a NAS is just a fancy Linux server, one that many technophiles believe is vastly overpriced. After all, any bog-standard PC with even modest specs can run Linux, share files, and host a few hard drives, right?

Well, yes, but a NAS can also do the following:

1. Come with its own backup, cloud storage sync, and device sync software.

2. Come with dedicated mobile apps.

3. Come with a slick web-based management interface.

4. Support a wide variety of built-in apps, all of which are sized and certified to work issue-free, without excessive tweaking, and using the same login accounts.

5. Run while consuming a lot less energy than an old PC.

6. Offer hardware support for hot-swapping SATA or SCSI drives.

7. Offer software support for snapshots, RAID pool maintenance, disaster recovery, etc.

It's #5-7 that we are interested in today. When I bought my NAS, I installed an 8TB drive. The drive wasn't technically NAS grade. It achieves its 8TB via a technique known as shingled magnetic recording (SMR), which means the tracks partially overlap. To explain it very simply, this gives high capacity at low cost, but in exchange the write speed is slower than that of a "normal" non-shingled drive.

The price was right and it worked, so I wasn't going to complain. My use cases mainly involve synching to and from the NAS using Resilio Sync, so write speed isn't nearly as important to me as it would be to someone who wants to edit video directly off the NAS.

A while later, I decided that I wanted to mirror to a second drive, so I attached the largest other drive I had at the time, which was 3TB. This meant I had to move things off the NAS, shrink the pool to less than 2.7TB (because the 3TB is marketing TB, not computer science TB), and then add the "new" drive.
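The "marketing TB versus computer science TB" gap is the difference between decimal terabytes (10^12 bytes), which drive makers advertise, and binary tebibytes (2^40 bytes), which most operating systems report. A quick sketch of the arithmetic, before any filesystem or RAID overhead is taken into account (the function name is just for illustration):

```python
# Drive makers use decimal units: 1 TB = 10**12 bytes.
# Operating systems usually report binary units: 1 TiB = 2**40 bytes.
BYTES_PER_TB = 10**12
BYTES_PER_TIB = 2**40


def marketing_tb_to_tib(tb: float) -> float:
    """Convert an advertised (decimal) TB figure to binary TiB."""
    return tb * BYTES_PER_TB / BYTES_PER_TIB


if __name__ == "__main__":
    for size in (3, 8, 10):
        print(f"{size} TB = {marketing_tb_to_tib(size):.2f} TiB")
```

The 3TB drive works out to roughly 2.73TiB, which is why the pool had to fit in less than 2.7TB.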

I moved the data off to a set of micro-servers called QuaStations, which you can read about in other entries here. While that works fine, my fleet of QuaStations has grown to around 20 servers, and well under half of my total storage is on the NAS. So every directory I have synched exists on at least two QuaStations, except for a few that exist on one QuaStation and the NAS.

For various reasons, I want to move to a model where everything (important, at least) exists on the NAS and on a single QuaStation. From the point of view of my computers, nothing will change: every folder will still be synched from at least two devices. But managing the QuaStations will be easier, and transfers will be faster, since the NAS has dual Ethernet ports.

The problem is that 3TB drive, which limits the mirror to about 2.6TB. Drive sizes have increased to the point that 18TB drives are now available in the shops, but they cost almost $400 even used. To get 18TB of capacity, I would have to replace *both* drives, meaning I would need to spend around $800. Suddenly, sticking with my current QuaStation solution doesn't seem so bad!

On the other hand, just buying another 8TB drive would unlock the full 8TB of my existing drive while still keeping a mirror. If I bought a slightly larger drive, say 10TB or 12TB, then the next time I upgrade the 8TB drive, I would also be able to unlock more than 8TB.

I found the pricing sweet spot to be 10TB, as I was able to get a real NAS drive (a Seagate IronWolf) for $170. In practical terms, 10TB is only about 9TB after converting from marketing to real numbers and accounting for some administrative space usage. Worse than that, I won't be able to use the entire 10TB, since the remaining 8TB drive is smaller. Still, by swapping the 3TB drive for a 10TB drive, my usable storage will go from 2.6TB to over 7TB, a gain of over 4.4TB.

If I later replace the 8TB drive with one larger than 10TB, my storage will jump by around another 2TB.
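The capacity reasoning above boils down to one rule for a two-drive mirror (which is how SHR behaves with two disks): every byte is stored on both drives, so the smaller drive sets the usable size. A minimal sketch, ignoring filesystem and system-partition overhead (so the figures come out a little higher than what DSM reports); the 12TB future drive and the helper names are hypothetical:

```python
BYTES_PER_TB = 10**12   # decimal TB, as printed on the label
BYTES_PER_TIB = 2**40   # binary TiB, as reported by the OS


def tib(tb: float) -> float:
    """Convert an advertised decimal TB figure to binary TiB."""
    return tb * BYTES_PER_TB / BYTES_PER_TIB


def mirror_capacity_tib(*drives_tb: float) -> float:
    """Raw capacity of a mirror: the smallest member is the limit.
    Filesystem and system-partition overhead are ignored."""
    return tib(min(drives_tb))


if __name__ == "__main__":
    before = mirror_capacity_tib(8, 3)    # original pool
    after = mirror_capacity_tib(8, 10)    # 3TB swapped for 10TB
    later = mirror_capacity_tib(12, 10)   # hypothetical: 8TB swapped for 12TB

    print(f"8TB + 3TB:   {before:.2f} TiB")
    print(f"8TB + 10TB:  {after:.2f} TiB (gain {after - before:.2f} TiB)")
    print(f"12TB + 10TB: {later:.2f} TiB (gain {later - after:.2f} TiB)")
```

Once the 10TB drive is in, the 8TB drive becomes the bottleneck, which is why the next upgrade only adds about 1.8TiB rather than the full size difference of the new drive.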

Normally, swapping out a drive in a RAID pool is a stressful experience, for two reasons:

1. If you are doing it manually on Linux, you want to be very sure you are doing everything correctly. You have block devices, filesystems, RAID devices, and the associated configuration. Normally you would have to deactivate the old drive, activate the new drive, rebuild the RAID with the new drive, grow the block device, and then grow the filesystem, all in the right order and without any typos. None of this is rocket science, but for most people it is something they do at most once every few months. The steps also differ depending on which distribution, volume manager, and filesystem you use; if you are using BTRFS or ZFS, you may or may not be using its native RAID. Any mistake can cost you your data or lead to many hours of unpleasantness. With BTRFS, it seems that you could just add the new drive, rebalance to get a copy of everything onto it, and then tell BTRFS to remove the old one, but then you need to be able to connect at least three drives (at least temporarily).

2. If your hardware doesn't support hot swap, you need to shut down to replace the drive. Moreover, with a standard PC, you may need to take it apart to even get to the drive. Do you even remember which drive is which?
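To make the "all in the right order" point concrete, here is a minimal sketch assuming a plain Linux mdadm RAID1 with ext4 directly on the array (no LVM). It only builds and prints the command sequence; it runs nothing. The helper name raid1_swap_plan and the device names /dev/md0, /dev/sdb1 and /dev/sdc1 are hypothetical. A stack with LVM between the array and the filesystem would need extra steps (such as pvresize and lvextend) before the filesystem resize.

```python
import shlex


def raid1_swap_plan(array: str, old_part: str, new_part: str) -> list:
    """Return the ordered commands (as argv lists) for replacing one member
    of an mdadm RAID1 with a larger partition and growing ext4 on top.
    Assumes the new disk has already been partitioned."""
    return [
        # 1. Mark the outgoing member as failed, then detach it from the array.
        ["mdadm", "--manage", array, "--fail", old_part],
        ["mdadm", "--manage", array, "--remove", old_part],
        # (Physically swap and partition the new disk at this point.)
        # 2. Add the new member; the kernel starts rebuilding the mirror onto it.
        ["mdadm", "--manage", array, "--add", new_part],
        # 3. Block until the rebuild has finished before resizing anything.
        ["mdadm", "--wait", array],
        # 4. Grow the array to the largest size that fits on all members.
        ["mdadm", "--grow", array, "--size=max"],
        # 5. Grow the ext4 filesystem to fill the enlarged array.
        ["resize2fs", array],
    ]


if __name__ == "__main__":
    # Hypothetical device names; check yours with lsblk and /proc/mdstat.
    for cmd in raid1_swap_plan("/dev/md0", "/dev/sdb1", "/dev/sdc1"):
        print(shlex.join(cmd))
```

Printing the plan instead of executing it lets you read every command before typing it, which is where most of the risk lies.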

Luckily, since I am using a Synology NAS, the process was even easier than I expected.

I followed the steps outlined below:

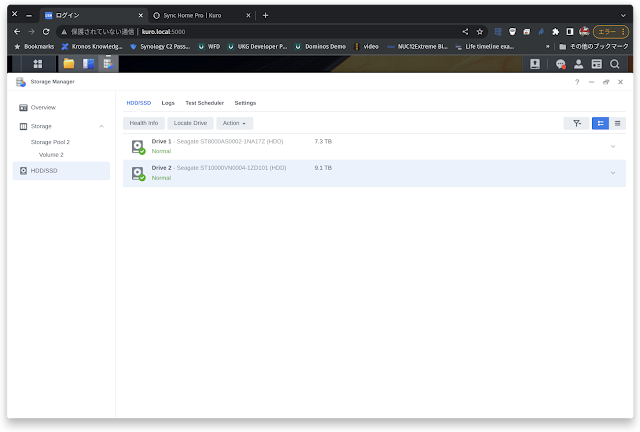

- Log into the NAS and open Storage Manager.

- Go to the "HDD/SSD" section, right-click on the smaller drive, and choose to deactivate it.

- After a warning and a request for the admin password, the drive is deactivated and the SHR RAID changes to degraded status. The software tells me to look for the drive with the orange light.

- Sure enough, the LED on one of the drive bays has turned orange. I pull that drive out and check it to confirm that it is the correct one (it says 3TB on the label).

- I remove the 3TB drive from the drive sled, and then clean the sled with alcohol since it has been in the NAS for years collecting dust.

- I insert the new 10TB Seagate drive into the drive sled, and slide it into the NAS.

- I go back to the computer and check Storage Manager again, which happily shows the new drive in the "HDD/SSD" section, and I check its "Health Info".

- Since there are no issues with the drive's health, I add it to the array. It asks whether I want to increase the array size at the same time. Of course!
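Storage Manager shows the rebuild progress, but because SHR is built on Linux md RAID, the same information should also appear in /proc/mdstat if SSH access is enabled on the NAS. The sketch below assumes the standard md progress-line format and pulls out the percentage and estimated time remaining. PROGRESS_RE and rebuild_progress are hypothetical names, and the sample line in the comment is illustrative, not output from my NAS.

```python
import re
from typing import Optional

# Matches the progress line the Linux md driver writes to /proc/mdstat while
# an array is rebuilding, for example (illustrative):
#   [==>.................]  recovery = 12.6% (983312768/7804071616) finish=650.0min speed=174888K/sec
PROGRESS_RE = re.compile(
    r"(?P<action>recovery|resync|reshape|check)\s*=\s*(?P<percent>[\d.]+)%"
    r".*?finish=(?P<finish>[\d.]+)min"
    r".*?speed=(?P<speed>\d+)K/sec"
)


def rebuild_progress(mdstat_text: str) -> Optional[dict]:
    """Return details of the first rebuild/resync found, or None if there is none."""
    m = PROGRESS_RE.search(mdstat_text)
    if m is None:
        return None
    return {
        "action": m.group("action"),
        "percent": float(m.group("percent")),
        "hours_left": float(m.group("finish")) / 60,
        "speed_mib_s": int(m.group("speed")) / 1024,  # md reports KiB/s
    }


if __name__ == "__main__":
    try:
        with open("/proc/mdstat") as f:
            print(rebuild_progress(f.read()))
    except FileNotFoundError:
        print("No /proc/mdstat on this machine (not a Linux md host).")
```

Polling this every few minutes is enough; a mirror rebuild onto a large drive can take many hours.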
